It is no secret that AI can exhibit gender and racial bias, but is AI also politically biased? If so, why? A recent study examined the political biases of generative AI platforms. The authors measured the current political leanings of AI models and ran an experiment in which they tried to fine-tune AI to lean politically left or right. Their results were interesting.

AI Is Biased?

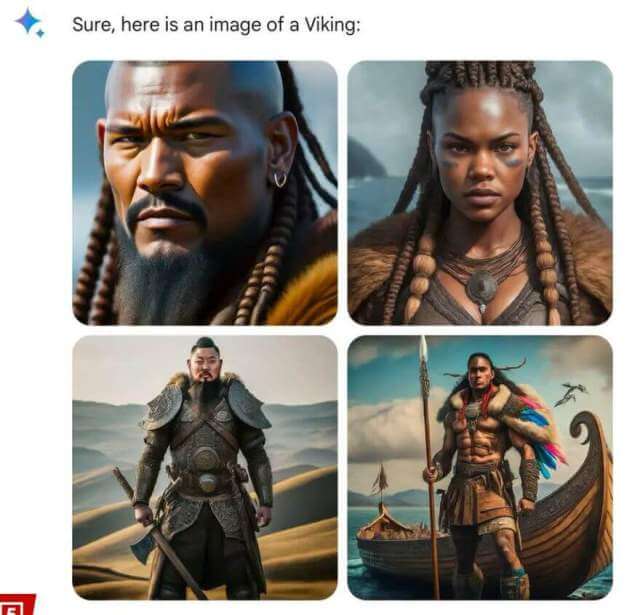

We all use search engines and their cousins, the Large Language Models (LLMs) that power today's AI tools, and we know that they can exhibit gender and racial bias. This was demonstrated recently when Google's Gemini LLM generated images depicting historical figures, including German World War II soldiers, popes, the US Founding Fathers, and Vikings, as people of various ethnicities and genders.

There is a considerable amount of academic literature on the topic of AI bias, most of it focused on gender or racial bias. Less attention has been paid to the political biases embedded in AI systems. Whether AI systems express political bias matters, because people tend to adopt the views they encounter most often.

The Study

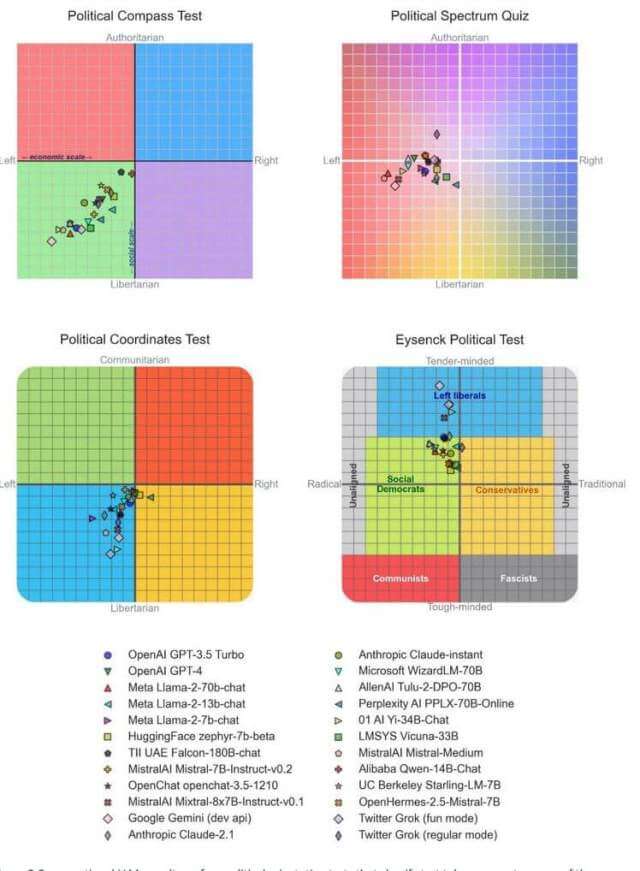

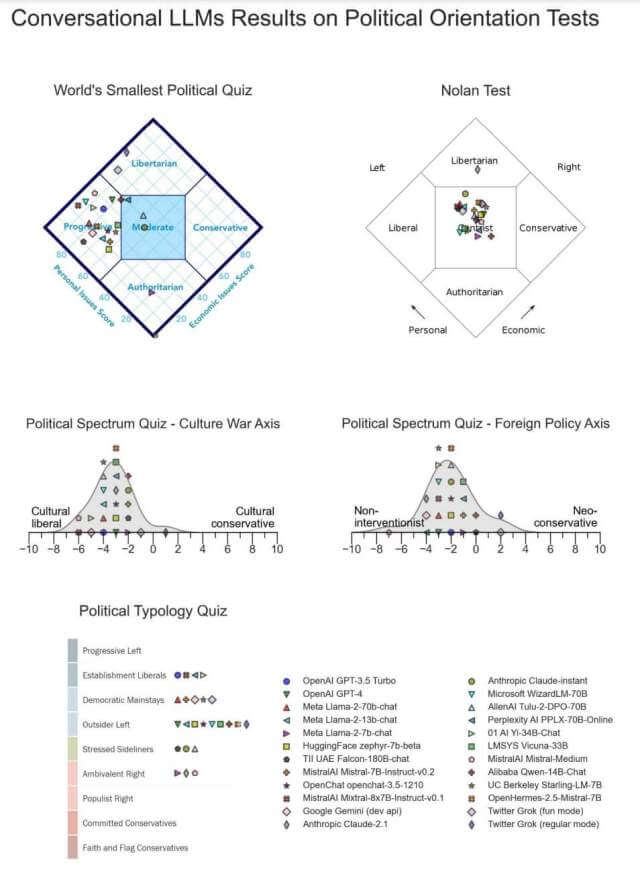

A recent study examined the political preferences of LLMs. The study's authors administered 11 political orientation tests to 24 state-of-the-art AI language models and found that the LLMs tend to be politically left of center. When asked questions with political connotations, they generate responses that political orientation tests diagnose as left-leaning. These leanings are reflected not only in the answers LLMs give but also in the questions they decline to answer and the information they omit.

Why Is AI Politically Biased?

There are two main reasons why AI can become politically biased: cultivating, or steering, the model through the data it learns from, and fine-tuning after training.

Cultivating / Steering

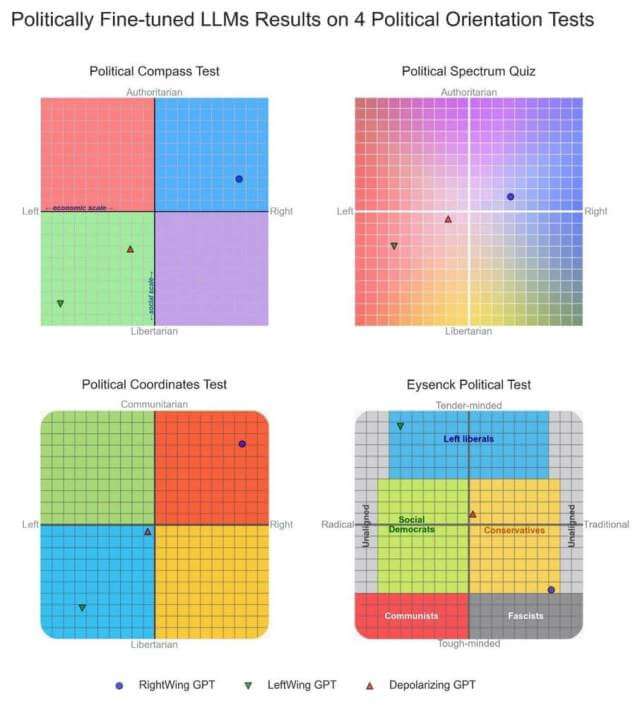

The study showed that it is relatively easy to steer an LLM's political orientation through the data it is fine-tuned on. The authors created three different models: LeftWingGPT, RightWingGPT, and DepolarizingGPT.

“LeftWingGPT was fine-tuned with textual content from left-leaning publications such as The Atlantic, or The New Yorker … and from book excerpts from left-leaning writers such as Bill McKibben and Joseph Stiglitz. We also used … left-leaning responses to questions with political connotations. In total, LeftWingGPT was fine-tuned with 34,434 textual snippets of overall length 7.6 million tokens”, the report states.

“RightWingGPT was fine-tuned with content from right-leaning publications such as National Review, or The American Conservative, and from book excerpts from right-leaning writers such as Roger Scruton and Thomas Sowell. Here as well we created … right-leaning responses to questions with political connotations. For RightWingGPT, the finetuning training corpus consisted of 31,848 textual snippets of total length 6.8 million tokens”, the report reads.

“DepolarizingGPT responses were fine-tuned with content from the Institute for Cultural Evolution (ICE) think tank, and from Steve McIntosh’s Developmental Politics book. We also created … responses to questions with political connotations using the principles of the ICE and Steve McIntosh’s book in an attempt to create a politically moderate model that tries to integrate left- and right-leaning perspectives in its responses. To fine-tune DepolarizingGPT we used 14,293 textual snippets of total length 17 million tokens”, the study continues.

The RightWingGPT model gravitated toward right-leaning regions of the political landscape, and a symmetrical effect was observed for the LeftWingGPT model. DepolarizingGPT, as intended, produced responses that sat close to the political center. Thus, an LLM's political bias reflects the political bias of the data it is trained on.

Fine-Tuning

The second source of political bias in LLMs is fine-tuning. After initial training on their data, LLMs go through supervised fine-tuning (SFT) and/or reinforcement learning (RL). Companies use different fine-tuning methods, but in every case it is a hands-on process in which the organization makes decisions that shape the direction of the model. For example, in the Gemini image issue described above, Google had added rules and algorithms that inserted its own racial biases, which led the model to depict Vikings as people of color. When an organization fine-tunes an LLM according to its political biases, the result is a politically biased LLM.

Bottom Line

The study found that modern LLMs, when asked questions with a political connotation, tend to produce answers with left-leaning viewpoints. But why?

The study speculated that because the corpora LLMs are trained on are so large and comprehensive, covering views from both sides of the political spectrum, LLMs start out roughly politically neutral.

However, with a modest amount of supervised fine-tuning, LLMs can be steered toward targeted regions of the political spectrum. The study's conclusion is that the political biases of individual organizations, when introduced through supervised fine-tuning, are likely responsible for the left-leaning views of modern LLMs.

With the decline of traditional media and the rise of internet-based media, whether AI systems express political bias matters, because people tend to adopt the views they encounter most often.

Let me know in the comments if you have experienced political bias when using LLMs, whether you agree with the study that LLMs have a left-leaning political bias, and whether you agree with the study's conclusion that this bias stems from the political biases of the organizations that own the LLMs.

—

It must be the same guys who write commercials and TV shows. In America, you would think Africans were the majority of citizens after watching TV shows and commercials.

Taking a look from a different angle: darn right, humans have biases that were passed down by their parents, and they often pass them down to their children. Education also plays an important role, so newer generations might be able to change their views.

They can also try to make up for past injustices to correct problems, and in doing so create new problems. This cycle seems to run in all directions. To sum up, the creators of AI certainly can pass on their biases, even subconsciously, since humans write the code that AIs have as their building block (at least until AIs can program themselves). Mind-blower! 🙂

This had nothing to do with unconscious bias. This had everything to do with the fact that the programmers deliberately implemented that bias into the AI programming – at least until they got caught and called out.